napp-it SE Solaris/Illumos Edition

- no support

- commercial use allowed

- no capacity limit

- free download

napp-it cs client server

- home use (3x free)

- commercial use (1x free)

- free download

napp-it SE and cs

- Individual support and consulting

- Bugfixes and updates to the newest releases

- Redistribution/Bundling/Installation on demand allowed

Details: Featuresheet.pdf

Concept: What Hardware

- Minimum: Mainboard with 64-bit CPU, 2 GB RAM, onboard Sata (AHCI) for a barebone storage server (example: HP Microserver). Not suitable for all-in-one due to missing VT-d (pass-through, needed for storage server virtualisation)

- Best: Server-class mainboard with Intel server chipset, VT-d, Intel NIC, ECC RAM, at least 3 fast PCI-e slots, as much RAM as you can afford

- My favorite: SuperMicro mainboards of the X9...-F series (from 150 Euro), i5 or Xeon with LSI 9211 HBA disk controller (200 Euro). Example: X9-SCL* -F or X8-DTH-6F (use the -F models with KVM, you will not regret the 30 Euro premium)

- Use a Sata (single or mirrored) OS disk, best a 30 GB+ SSD (I prefer the Intel 320 series)

- If you need more disk ports: Use an LSI SAS2 HBA controller, for example one based on the LSI 2008 chip (best with IT firmware), like an LSI 9211-8i, cheaper and quite compatible models like the IBM 1015, or a 9201-16i with 16 ports

- Avoid (trust me): USB boot disks (not working or too slow), hardware Raid controllers (those with Raid-5/6 and battery) and Realtek NICs

What OS for napp-it

- OmniOS: free, similar to the OpenIndiana (OI) server edition but with a stable release every 6 months, bi-weekly updates and optional paid support

- OpenIndiana: free, for server and desktop use (I also use the desktop version on servers because of Time Slider and its ease of use)

- Illumian: free, base for NexentaStor 4, think of it as an OpenIndiana text installation with Debian-like packaging

Read: The Rise and Development of Illumos (Illumos = kernel project with base tools for Illumian, OpenIndiana, SmartOS and others)

- Solaris 11: only free for developer and trial use; an option if you need commercial OS support

Case

- 19" rack case from SuperMicro, Chenbro or Norco with hot-plug bays

- avoid expanders when not needed; if you need one, prefer SAS disks

- expect growing storage needs, look for enough free bays

Pool Layout

Pool layout is always a compromise between capacity, cost, data security and performance. Some basic rules are:

- Pools are built from one or more vdevs (independent Raid-sets). ZFS stripes data over these vdevs in a Raid-0 manner

- Best is to keep these vdevs similar in Raid-level, size and performance

- With high capacity disks, prefer Raid-sets that can survive a two-disk failure (3way mirror, Raid-Z2, Raid-Z3)

- I/O performance of a pool scales with the number of vdevs, sequential performance with the number of data disks
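The rules above can be turned into a small calculator. The following is only a rough sketch, not a napp-it or ZFS tool: the class `Vdev`, the function `pool_summary` and the disk sizes are made up for illustration, and the capacity figure ignores ZFS metadata and padding overhead.

```python
from dataclasses import dataclass

# Number of redundancy disks per Raid-Z level.
PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


@dataclass
class Vdev:
    kind: str       # "basic", "mirror", "raidz1", "raidz2" or "raidz3"
    disks: int      # number of disks in this vdev
    disk_tb: float  # capacity of a single disk in TB (illustrative)

    def redundancy(self) -> int:
        """Disks that may fail inside this vdev without data loss."""
        if self.kind == "basic":
            return 0
        if self.kind == "mirror":
            return self.disks - 1
        return PARITY[self.kind]

    def data_disks(self) -> int:
        """Disks that contribute capacity (simplified model)."""
        if self.kind == "mirror":
            # A mirror stores one disk's worth of data, whatever its width.
            return 1
        return self.disks - self.redundancy()


def pool_summary(vdevs):
    """Summarize a pool that stripes over the given vdevs."""
    return {
        "vdevs": len(vdevs),  # rough indicator for I/O performance
        "data_disks": sum(v.data_disks() for v in vdevs),  # sequential
        "usable_tb": sum(v.data_disks() * v.disk_tb for v in vdevs),
        # The pool is lost as soon as one vdev is lost, so only the
        # weakest vdev counts for the guaranteed failure tolerance.
        "guaranteed_failures": min(v.redundancy() for v in vdevs),
    }


# The same six 4 TB disks in two layouts.
mirror_pool = pool_summary([Vdev("mirror", 2, 4.0)] * 3)
raidz2_pool = pool_summary([Vdev("raidz2", 6, 4.0)])
print(mirror_pool)
print(raidz2_pool)
```

In this model the three mirrors give three vdevs (better I/O) but less capacity and only one guaranteed disk failure, while the single Raid-Z2 gives more capacity and survives any two failures.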

ZFS Software-Raid-Levels

- Basic (no Raid), can be used to build Raid-0 like pools without redundancy or single-disk mediapools with SnapRaid for backups

- Mirror (2way, 3way), best I/O, best expandability, prefer this for databases or ESXi datastores, with as many vdevs as possible.

- Raid-Z1, similar to Raid-5, highest capacity, lowest cost, do not use with more than 5 disks or with high capacity disks due to long rebuild times.

- Raid-Z2, similar to Raid-6, allows two disks to fail, mostly used with 6-10 disks per vdev.

- Raid-Z3, allows three disks to fail in this vdev, mostly used with 11-19 disks per vdev.
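On the command line, these levels map to the vdev keywords of `zpool create` (`mirror`, `raidz1`, `raidz2`, `raidz3`; basic vdevs are listed without a keyword). As a rough sketch, the helper below only builds and prints such a command, it does not run it. The function name `zpool_create_command`, the pool name `tank` and the disk names are placeholders, and `shlex.join` needs Python 3.8 or newer.

```python
import shlex

# Level name -> keyword used by "zpool create"; basic disks have none.
VDEV_KEYWORDS = {
    "basic": None,
    "mirror": "mirror",
    "raidz1": "raidz1",
    "raidz2": "raidz2",
    "raidz3": "raidz3",
}


def zpool_create_command(pool, kind, vdevs):
    """Build a 'zpool create' command line for vdevs of one Raid-level.

    vdevs is a list of disk-name lists, one inner list per vdev.
    """
    if kind not in VDEV_KEYWORDS:
        raise ValueError(f"unknown vdev type: {kind}")
    keyword = VDEV_KEYWORDS[kind]
    args = ["zpool", "create", pool]
    for disks in vdevs:
        if keyword is not None:
            args.append(keyword)  # starts a new vdev
        args.extend(disks)
    return shlex.join(args)


# Two 2way mirrors striped into one pool (disk names are placeholders).
mirror_cmd = zpool_create_command(
    "tank", "mirror", [["c1t0d0", "c1t1d0"], ["c1t2d0", "c1t3d0"]]
)
# One Raid-Z2 vdev with six disks.
raidz2_cmd = zpool_create_command(
    "tank", "raidz2", [[f"c1t{i}d0" for i in range(6)]]
)
print(mirror_cmd)
print(raidz2_cmd)
```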

ARC (read cache) and ZIL (write-log)

Any Solaris-type OS should have at least 2 GB RAM. If you do not have more, performance is mostly limited to raw disk performance. If you add more RAM, it is mostly used to cache reads (ARC). With enough RAM, more than 80% of all reads can be served from RAM without delay. This is why you can say: ZFS read performance scales mostly with RAM. If your RAM is limited, you may add an SSD as an additional read cache (L2ARC, second-level ARC). This can improve read performance, but not as much as more RAM (RAM is much faster than an SSD).
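Why the hit rate matters so much can be seen with a simple weighted average. This is only a back-of-the-envelope sketch: the function `average_read_latency_us` is made up for illustration, and the two latency values are assumed placeholders, not measurements.

```python
def average_read_latency_us(hit_ratio, ram_us, disk_us):
    """Average read latency when hit_ratio of all reads come from RAM."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * ram_us + (1.0 - hit_ratio) * disk_us


# Assumed placeholder latencies, only for illustration:
RAM_US = 1.0        # a read served from the ARC
DISK_US = 10_000.0  # a random read from a spinning disk

for ratio in (0.0, 0.5, 0.8, 0.95):
    latency = average_read_latency_us(ratio, RAM_US, DISK_US)
    print(f"ARC hit ratio {ratio:.0%}: about {latency:.0f} us per read")
```

In this model the remaining disk reads dominate the average, so every additional percent of reads served from RAM pays off directly.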

On writes, you will notice that Solaris collects all data that needs to be written to disk for about 5 s in RAM and then writes it in one large sequential write to disk. This conversion of many small random writes into one large sequential write is the reason why ZFS is ultra-fast, even with small random writes. The problem: on a power failure, up to the last 5 s of (committed) writes are lost. Although this does not affect ZFS filesystem consistency, it can be a real problem with databases or ESXi virtual machines, which can become corrupted.

In such situations, ZFS allows the use of sync write, where each single write command must be committed to disk before the next one can occur. This improves data security enormously but can lower write performance down to 1/100 of the value without sync write, especially in a multi-tasking situation with small random writes. To overcome this, ZFS can log sync writes to a dedicated log device (ZIL) from which data can be recovered after a power outage. Write performance is then mostly limited by the I/O and latency of this log device. While you can never reach the values of non-sync writes, a log device can improve sync write performance on slow disks by a factor of 10 when it is an SSD and by a factor of 100 with DRAM-based log devices like a ZeusRAM.

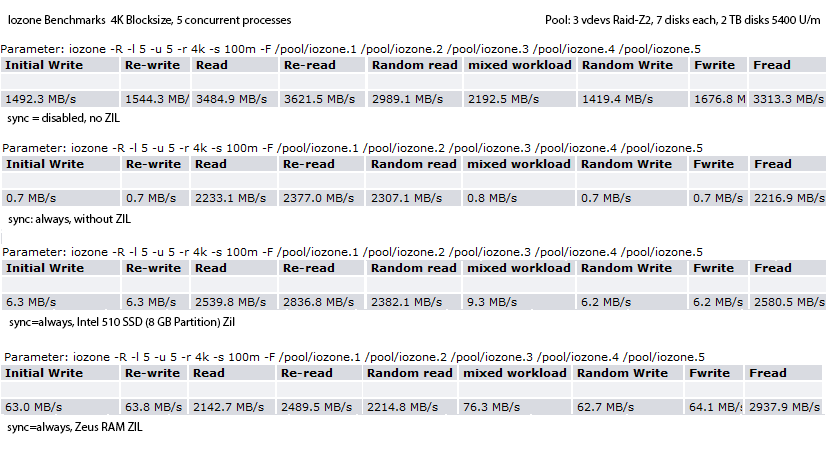

Example 1: Sync write values on a pool of green disks (small 4k writes, 5 concurrent processes)

As you can see, the regular non-sync write performance of 1544 MB/s drops to 0.7 MB/s with sync writes (the disks are slow green disks).

When an SSD ZIL (8 GB partition of a larger MLC SSD) is used, you achieve 6.3 MB/s, which goes up to 63 MB/s with a DRAM-based ZeusRAM.
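To put these numbers in relation, the short sketch below computes the factors between them. The values are the ones quoted in the text for Example 1; the dictionary keys and the function name `speedup` are just labels chosen for this illustration.

```python
# Write throughput in MB/s as quoted in the text for Example 1.
MEASURED_MB_S = {
    "non-sync": 1544.0,
    "sync, no log device": 0.7,
    "sync, SSD log": 6.3,
    "sync, ZeusRAM log": 63.0,
}


def speedup(value_mb_s, baseline_mb_s):
    """How many times faster value_mb_s is than baseline_mb_s."""
    return value_mb_s / baseline_mb_s


baseline = MEASURED_MB_S["sync, no log device"]
factors = {name: speedup(v, baseline) for name, v in MEASURED_MB_S.items()}

for name, factor in factors.items():
    print(f"{name}: {factor:.0f}x of sync writes without a log device")
```

The SSD log comes out at roughly 9x and the ZeusRAM at roughly 90x, which matches the rule of thumb of a factor of 10 and 100, while non-sync writes remain far ahead of both.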

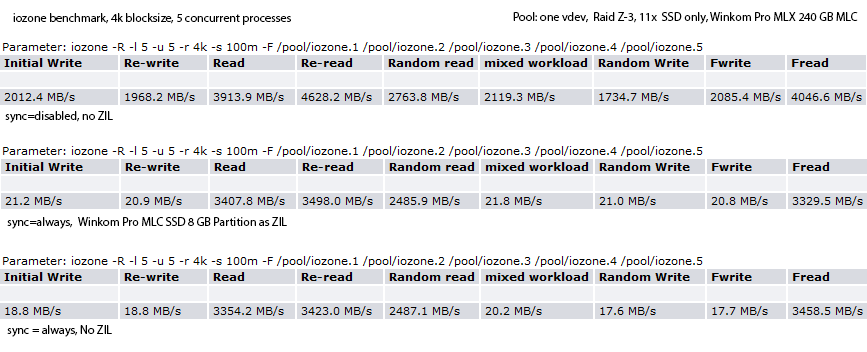

Example 2: Sync write values on an SSD-only pool (small 4k writes, 5 concurrent processes)

With SSD-only pools, sync values are much better even without a dedicated ZIL, but I would still suggest one. An SSD-based ZIL improves the values only a little (DRAM-based ones are much better), but it reduces the amount of small random writes hitting the pool, which lower the performance and durability of MLC SSDs.
